When artificial intelligence (AI) is depicted in popular culture, it’s usually in disaster movies. These days, we’re a lot more used to the idea of AI being used as a part of everyday life – from chatbots on helplines to virtual assistants like Siri. But AI is capable of much, much more.

There are different levels of AI. The most basic types of AI are given a list of rules by their operator, and they can be brilliant at applying these rules, but can’t break free of them. Examples of these are basic chatbots or computers playing chess.
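To make this concrete, here is a minimal sketch of a rule-based chatbot in Python. The keywords, replies and the `rule_based_reply` function are all made up for illustration and do not come from any real helpline system.

```python
# Hypothetical rules for illustration: keyword -> canned reply.
RULES = {
    "opening hours": "We are open 9am to 5pm, Monday to Friday.",
    "donate": "You can donate through our website.",
}

FALLBACK = "Sorry, I don't understand. Please rephrase your question."


def rule_based_reply(message: str) -> str:
    """Return the reply for the first rule whose keyword appears in the message."""
    text = message.lower()
    for keyword, reply in RULES.items():
        if keyword in text:
            return reply
    # No rule matched: the program cannot improvise beyond its list.
    return FALLBACK


print(rule_based_reply("What are your opening hours?"))
print(rule_based_reply("Can you recommend a film?"))
```

The second question falls outside the list of rules, so the program can only give its fallback answer, however sensible the question is.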

Machine learning is the next level. It can work on things like image and pattern recognition that can’t be explained with a list of rules. These programs need to be ‘taught’ what to do and need vast amounts of data to learn from.

Although the term covers any computer program that can mimic human behaviour or thought, AI learns in a completely different way to us. Our brains are amazingly good at recognising patterns and applying knowledge from one area to solve problems in another. Machine learning can’t replicate this flexibility.

Our research projects that involve artificial intelligence usually use machine learning – so let’s take a look at how this works.

How a machine learns

AI is dumb – it starts out knowing nothing and understanding nothing. If you want a machine learning program that can point out cancer cells in a microscope image, you have to train it on hundreds or thousands of images.

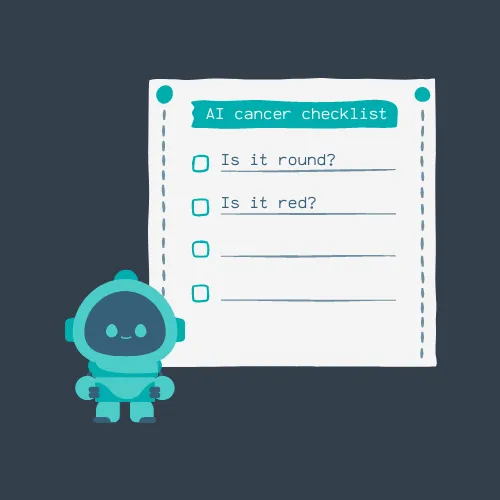

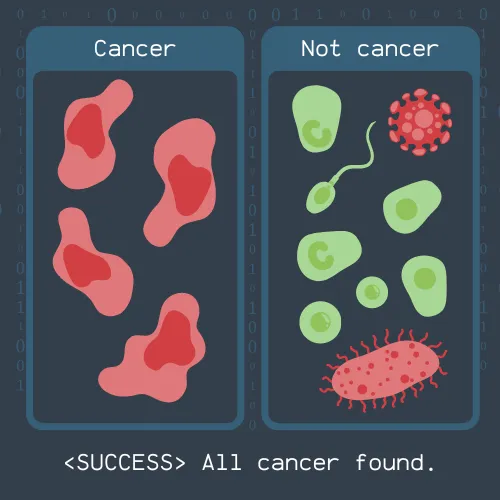

To start with, it’ll just point at random bits of the picture, not knowing what to look for. When it eventually gets it right by chance, it starts to build an idea of what a cancer cell looks like. For example, it might decide cancer cells are an irregular shape. So then, it’ll point out everything that’s not round, including healthy cells. When it eventually gets another cancer cell correct, it will build on its idea – for example, determining that cancer cells are not round and, in the example below, are red.

To start with, the AI will only have a rough idea of what to look for. It won’t have found the exceptions to the rules yet.

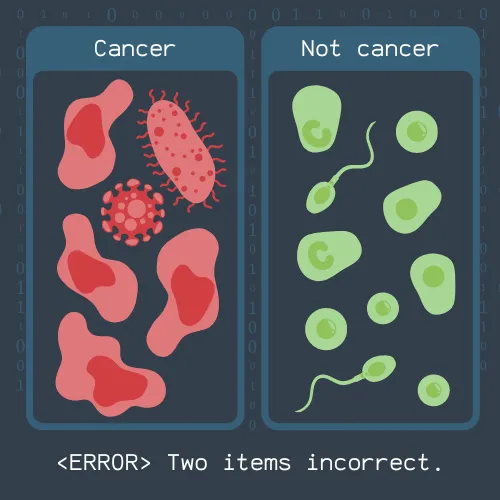

In this example, the bacteria cell and the virus are not round and are red, but are still not cancer. Once the AI is told it has got those two items incorrect, it can start to develop new rules that ensure it will be correct in the future.

Over the course of hundreds of images, it will build an accurate picture of what a cancer cell looks like through trial and error. Then, it will be exceptionally good at picking out cancer cells, spotting details that human eyes may not have noticed.

Over the course of hundreds of iterations, the AI will develop a comprehensive set of rules that can successfully distinguish between cancer cells and everything else.

This example covers images, but machine learning programs can be trained on other things, like DNA sequences, healthcare data, text, video and audio.
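The trial-and-error process described above can be sketched with a perceptron, one of the simplest learning algorithms. This is only an illustration: real image models learn from raw pixels and far more data, and the three yes/no features used here (irregular shape, red, enlarged nucleus) are made up to keep the example small.

```python
# Hypothetical hand-made features per object: (irregular shape, red, enlarged nucleus).
# Label 1 means cancer, label 0 means not cancer.
EXAMPLES = [
    ((1, 1, 1), 1),  # cancer cell
    ((0, 0, 0), 0),  # healthy round cell
    ((1, 0, 0), 0),  # healthy cell that happens to be irregular
    ((1, 1, 0), 0),  # bacterium: irregular and red, but not cancer
    ((0, 1, 0), 0),  # round red cell
]


def predict(weights, bias, features):
    """Guess 1 (cancer) if the weighted sum of the features is positive."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def train(examples, max_passes=100):
    """Perceptron rule: only adjust the weights when a guess is wrong."""
    weights, bias = [0.0] * len(examples[0][0]), 0.0
    for _ in range(max_passes):
        mistakes = 0
        for features, label in examples:
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + error * x for w, x in zip(weights, features)]
                bias += error
        if mistakes == 0:  # every example is now classified correctly
            break
    return weights, bias


weights, bias = train(EXAMPLES)
for features, label in EXAMPLES:
    print(features, "predicted:", predict(weights, bias, features), "actual:", label)
```

Each wrong guess nudges the weights, much like the AI above being told it has got the bacteria cell wrong. The program starts with no idea what matters and ends up relying on the features that actually separate cancer from everything else.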

Can it take over the world?

At the moment, AI is not sentient – meaning that it can’t think and feel like a human or understand the wider context of its actions. We don’t know when we might be able to develop AIs that are conscious and understand the world around them, because we don’t fully understand what consciousness is.

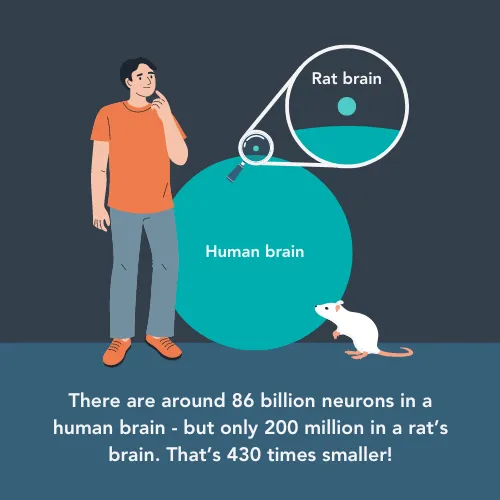

The most complicated type of AI currently available is deep learning. These programs try to mimic the multi-layered nature of the human brain – using billions of connections, a bit like neurons and synapses in our brains. Examples are found in self-driving cars, which need to be able to recognise unexpected objects and scenarios and respond accordingly, and in large language models like ChatGPT.

Programmers have made these programs amazingly complicated, and the programs are very good at what they do. However, they are still a long way off mimicking the human brain. ChatGPT is currently one of the largest deep learning programs and has only just surpassed the complexity of a rat’s brain.

So, there is no chance that an AI which can write an essay or predict which films you’ll watch next would be able to develop the wide range of skills needed to take over the world. You can feel quite safe that AI is not out to get you – for the foreseeable future.

What about AI in healthcare research?

AI has the potential to make a huge positive impact on the healthcare industry, using its ability to spot patterns to make faster diagnoses, personalise treatments to patients' unique genetics, predict risk, and much more.

However, due to the large amounts of data needed to train AI programs, there are two main concerns. The first is around the use of personal data – whether it is being used safely, whether it is at risk of being stolen or misused, and whether that person has agreed to it being used in this way. Whilst much of this is covered by the UK’s Data Protection Act, there is still a lot of ongoing work to establish national and international standards.

The second concern is the risk of bias when using existing data. If that data under-represents or misrepresents certain groups of patients, AI can draw incorrect conclusions about them, leading to misdiagnoses and differences in care. It is important to make sure any data used to train AI is fair and accurate – but this can be a considerable task as AIs need so much data to learn from.
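One simple way to look for this kind of bias is to compare how often a program is right for each group of patients. The sketch below assumes a made-up list of records and a hypothetical `accuracy_by_group` helper. Real fairness checks use far more data and a wider range of measures.

```python
from collections import defaultdict

# Hypothetical records: (patient group, model prediction, true diagnosis).
RECORDS = [
    ("group A", 1, 1), ("group A", 0, 0), ("group A", 1, 1), ("group A", 0, 0),
    ("group B", 0, 1), ("group B", 0, 0), ("group B", 1, 1), ("group B", 0, 1),
]


def accuracy_by_group(records):
    """Return the fraction of correct predictions for each patient group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


print(accuracy_by_group(RECORDS))
```

In this invented data, the program is right every time for group A but only half the time for group B, which is the kind of gap that would need investigating before the program was used.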

The AI programs used in healthcare research are all types of ‘narrow AI’. This means that they don’t have any awareness of the implications of their tasks or the wider context. However, they can be amazing at their tasks, and help improve and speed up research.

The CCLG Research Funding Network has supported several projects which include AI in some capacity. Read on to find out about one of these innovative projects!

Assessing new medicines for leukaemia

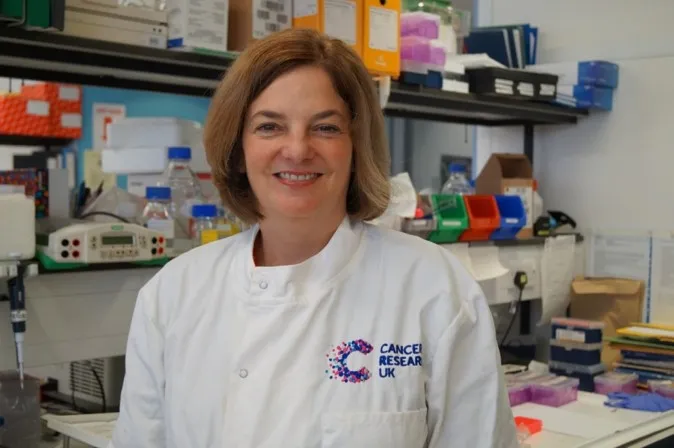

Professor Julie Irving is working on a Little Princess Trust-funded research project that uses AI but does not involve healthcare data. She aims to find new medicines for acute myeloid leukaemia (AML). It can be hard to cure this type of blood cancer in children, despite there being exciting new drugs available for the same cancer in adults.

Professor Julie Irving

Julie wants to create a combination treatment of the new drugs, called MEK inhibitors, with existing chemotherapy medicines. Her team are using AI to identify which drugs and combinations of drugs work best. She said:

"We have tested 2000 drugs to see which ones will kill AML cells while they are growing on a support layer of normal cells. For this, we need to examine images of the leukaemic and normal cells after treatment with each of the 2000 drugs and count each cell type. This will tell us which drugs are most active against AML cells, but which spare the normal cells. As you can imagine, this is very time-consuming, so we used machine learning to speed up the analyses."
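Once a program has counted the cells in each image, comparing drugs becomes simple arithmetic. The sketch below is not the team's actual analysis: the drug names, cell counts and the 80% cut-off for sparing normal cells are all invented for illustration.

```python
# Hypothetical cell counts after treatment, e.g. produced by an image classifier.
COUNTS = {
    "untreated": {"aml": 1000, "normal": 500},
    "drug_1": {"aml": 100, "normal": 450},
    "drug_2": {"aml": 50, "normal": 100},
    "drug_3": {"aml": 900, "normal": 490},
}


def surviving_fractions(counts, control="untreated"):
    """Express each drug's cell counts as a fraction of the untreated counts."""
    base = counts[control]
    return {
        drug: {cell: n / base[cell] for cell, n in cells.items()}
        for drug, cells in counts.items()
        if drug != control
    }


def rank_selective_drugs(counts, min_normal_survival=0.8):
    """Keep drugs that spare normal cells, ordered by fewest surviving AML cells."""
    fractions = surviving_fractions(counts)
    spared = {d: f for d, f in fractions.items() if f["normal"] >= min_normal_survival}
    return sorted(spared, key=lambda d: spared[d]["aml"])


print(rank_selective_drugs(COUNTS))
```

Here the invented `drug_2` kills the most AML cells but also most of the normal cells, so it is filtered out, leaving drugs that are active against AML while sparing the support layer.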

With not long left on the project, Julie and her team have compiled a shortlist of the best potential combination treatments. She hopes that one of these will be taken forward into a clinical trial, and may be given to patients within the next two to three years.

Julie believes AI can play an important role in the future:

"We are using AI first-hand to speed up our research, but I also think it will benefit diagnosis, disease monitoring, treatment and prevention of cancer and other diseases.

"As always, money is a limitation to using AI in childhood cancer research - and also scientists trained to use AI. However, many undergraduate and master’s science degrees are now incorporating it into their courses."

Ellie Ellicott is CCLG’s Research Communication Executive.

She is using her lifelong fascination with science to share the world of childhood cancer research with CCLG’s fantastic supporters. You can find Ellie on X: @EllieW_CCLG